SPICKETT.com

Welcome to the blog of theo spickett

Pinned Post!

Updating the Family Tree...06 Jan 2025

As a new-year's resolution, I decided to make some updates to the Family Tree. I realize there is not much more to be said about the recent generations

but I would like to research my paternal line past my great-grandfather - Arthur Edward Spickett and Jane Bailie Spickett (born: Maclean), as that is

currently as far back as I go.

I have decided to continue using www.myheritage.com even though it's a bit pricey - which is also why I would like to ask if any Spickett has a subscription

or is prepared to contribute to a subscription for me in order to maintain the tree properly. A complete subscription will cost me $99.50 for a year which

is not too stiff but I told myself that if I got ONE other person that's prepared to share the cost with me, I will do it. So I am asking for up to $49 in

donations in order for me to make work of it.

In addition, what I will pledge to do is to craft a photo page of the South African chapter of Spicketts, starting with my Grandfather, Leslie Arthur Spickett.

I recently re-discovered the old family albums and must find the time to digitize them. An example of the patriarch of the South African chapter below.

Current re-read...

Thus spake Zarathustra...06 Jan 2025

I'm re-reading Thus spake Zarathustra by Friedrich Nietzsche. My first reading of it was obscure - trying to interpret it as a disciple of Zarathustra

would have. Not that I was very receptive to the narrative but I was curious as to how the first-readers might have been influenced by it. Needless to say

it was not a very constructive read and I suspect I lost investment along the way.

This time I wanted to read it with a complete outsider perspective - a critical reader. And it's turning out to be quite rewarding. Nietzsche is an intriguing

author to read. He surprises readers sometimes twice in the same sentence which makes for an engaging intellectual activity. Of course, he completely

wipes the floor with conventional wisdom, but he does so with what almost feels like a sarcastic humor.

So I am going to create a Thus spake Zarathustra page and summarize some of the topics that he covers as I believe that there are some interesting and

valuable insights to be gleaned here. To be clear: I hardly ever personally agree with Nietzsche, and certainly do not endorse his world-views.

In fact, I think the man lived in a very special place in his head, and not so much in the world that we live in. But there are some topics that make me

want to write something about it as a "modern" reader out of the original context for which it was written. Some examples include (not a comprehensive list):

1. The concept of "God is dead", which is arguably the landmark concept that established Nietzsche as a philosopher.

2. The "Superman" that Zarathustra refers to - I believe there are parallels with today's rise of Artificial Intelligence. Certainly not a parallel that

he envisioned, but I feel that it fits the narrative far too well not to deserve an opinion or two.

3. The three metamorphoses being the Camel, Lion and Child.

4. Old and young women, with the famously laughable reference to the gift that the old woman gives him - being that "women should be whipped".

5. Voluntary death. It feels like Nietzsche paints one into a corner here: Should you believe in an afterlife, a voluntary death could be a

virtuous or even desirable thing. If you are a nihilist as Nietzsche certainly is himself, the value of life could often be seen as diminished to the point of

meaninglessness. So how does one escape this corner?

6. Virtue as something that seems to become ever more grand but elusive, and ultimately self-serving.

7. Popular wisdom, and how Nietzsche tends to play this off against "truth".

All of these topics will go into my Zarathustra page as a musing or a pastime for the next couple of weeks / months.

Into 2025!

It's that time of the year...28 Dec 2024

It is that time of the year - time for New Year's Resolutions and reflecting on the year behind us. 2024 was a tough year, and I am not saying that

lightly. There were some challenges well outside of my control that made progress, improvement and tracking goals almost impossible. That said, we are on

the other side of it - and whether we are out of the woods remains to be seen, but as they say - "keep going".

2025 is going to be a more grounded year. As much as I would like to list 10 things that I would like to achieve in 2025, I would be happy to just avoid as

much drama as possible. That does not mean that there are no outcomes that I would like to see, but if they are to become real, they will have to do so

slightly more organically and without deep expense of energy, effort and inspiration on my part. The Universe will need to coax them along and I shall be around for

the journey rather than be driving it.

Every year, I tend to pick a "project"...and in broad-strokes, I would classify them as an intellectual or physical project. It tells where the bulk of my

efforts will be made - and experience suggests it cannot always be a good balance of both. This past year was supposed to be more of a physical project, but

health necessarily forced it to become more of an intellectual year. I invested lots of time in training, up-skilling and ultimately investing deep efforts in

building a chess engine. I am happy with where that ended and I am going to park that project for now. Next year, I would like to just sit back and see where

the road leads. I almost want to say that I would like to rise above it a bit. I do want to read more again - that is perhaps the one thing that I will plan

deliberately.

And with that, I wish you all a Happy New Year and all the best that 2025 can bring.

Cycling - again?!

There's still some time left in August...22 Aug 2024

I noticed my post from almost a year ago where I was saying that August (in the southern hemisphere) is the month that nature seems to have a gentle way

to get you excited about exercising again. Everyone emerges from winter having done a little bit less (some form of pseudo hibernation) due to the cold

weather. But then August happens and it feels OK again to get up earlier and not have to put on 5 layers of clothing to brave the cold weather.

My intention about this time last year did not materialize due to some health challenges I had. The bicycle stayed behind on holiday and was really only

recently serviced again. The trip-computer may have been swallowed by the clutter of the house as it didn't move for a long enough time, so I am down

to using my cellphone as a trip computer for now. To be honest, it was due for an upgrade, and if I do ever find it again (which I am sure I will), I might

treat it with the same respect as all the other trinkets in the house that I hope will end up in a technology museum some day.

I did manage to get an opportunity to notch up a really nice hike out to an old friend - Giant's Castle in the Drakensberg. This time with the family. We

didn't summit (we didn't plan to) but we camped at one of the most awe-inspiring sites in the Drakensberg - looking up at Giant's Castle from the lower

berg plateau. Although it was soaking wet on the way up and down, the night was perfect with clear skies and no wind. It was VERY cold but we came prepared

and had the best sleep I have had in a very long time.

As we walked back past the base of the pass that goes up to the summit, my daughter made me promise that we would come back and summit. I will definitely

take her up on the challenge before the end of the year.

It's alive!

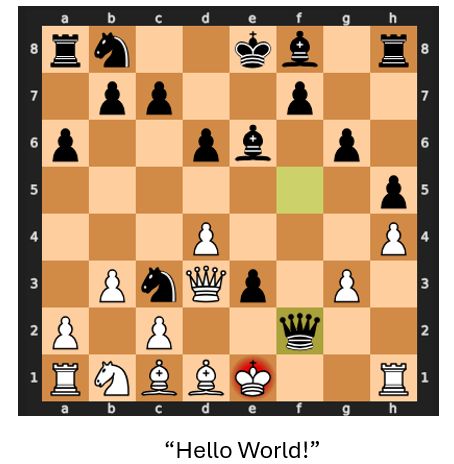

Update on my chess engine...21 Aug 2024

It's been so long since I last updated the site - but as they say: life happens. The chess engine has progressed, and here's the update.

The code allows for training-data creation by playing random legal moves and then committing the replay buffer to a database. I am using Teradata Express

running in a VirtualBox VM on my laptop (because I know Teradata quite well and am confident that I can scale it if I transfer the solution to a Cloud instance).

The random legal move replay-buffers allow me to calculate a discounted reward to be assigned (back-propagated) to the plies based on a -1/0/+1 reward basis for a

black win, draw and white win respectively. In the database, I then create a table that aggregates the back-propagated values for positions that have occurred

multiple times. Next up, I then ask the algorithm to repeat the play against itself but this time I ask it to check if the board (state stored in FEN notation)

has been encountered previously. If it hasn't, it continues to pick a random move as previously, but if it finds the board (state), it uses the aggregate reward

to check if it wants to play the move (i.e. a reward greater than 0 is picked on a white turn, and smaller than 0 is played on a black turn). All un-encountered states

are assumed to have a reward of 0.
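
As a rough illustration of the two steps described above, here is a minimal sketch in plain Python. The function names (discounted_rewards, pick_move) are made up for this example and are not taken from the engine's code. It assumes the last ply receives the full outcome and each earlier ply is discounted once more, and that when several known states look favourable the strongest one is played - the post only says a favourable reward is picked, so that tie-break is an assumption.

```python
import random

GAMMA = 0.95  # discount factor; the value the post mentions using


def discounted_rewards(num_plies, outcome, gamma=GAMMA):
    """Spread a final game outcome back over every ply of the game.

    outcome is +1 (white win), 0 (draw) or -1 (black win). The last ply
    gets the full outcome; each earlier ply is discounted once more.
    """
    return [outcome * gamma ** (num_plies - 1 - i) for i in range(num_plies)]


def pick_move(candidates, known_values, white_to_move, rng=random):
    """Choose a move from (move, resulting_fen) pairs.

    known_values maps a FEN string to its aggregated reward. States that
    were never encountered are treated as 0, so they never look
    favourable and the choice falls back to a random legal move.
    """
    favourable = []
    for move, fen in candidates:
        value = known_values.get(fen, 0.0)
        if (white_to_move and value > 0) or (not white_to_move and value < 0):
            favourable.append((value, move))
    if not favourable:
        return rng.choice(candidates)[0]
    # Assumption: White takes the largest value, Black the smallest.
    chooser = max if white_to_move else min
    return chooser(favourable, key=lambda pair: pair[0])[1]
```

In a real game the candidate pairs would come from the rules engine, one pair per legal move, with the FEN of the board after that move.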

The reward of the first game that it played doing this is shown below. It was not a bad game considering that this was purely based on a search of historical states

and no trained Q-learning reward function yet. The games are shorter than random move games (which makes sense), and the quality of the resulting replay buffers

improves.

Next up is the challenging bit: implementation of the Q-learning reward function. Keep in mind, I am not using a Convolutional Neural Network as is the original

implementation, but I believe I can simplify this using a one-hot-encoded matrix representing the FEN of the state. I am going to try to implement it in a VERY

small neural network - possibly 2-3 layers with 2-5 nodes each. Also, I am not teaching the model to play chess (I am not implementing a model-free engine) as I am

offering it legal moves using the Python chess library. So all it needs to do is pick good moves.
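
To make the one-hot idea concrete, here is a small sketch of how the board field of a FEN string could be turned into a 64 x 12 matrix of zeros and ones. This is only one possible encoding and an assumption on my part: the function name fen_to_one_hot and the column order are illustrative, and the sketch ignores the side to move, castling rights and en-passant fields.

```python
PIECES = "PNBRQKpnbrqk"  # white pieces first, then black pieces


def fen_to_one_hot(fen):
    """Return a 64 x 12 matrix (list of lists) for the FEN's board field.

    Row index 0 is a8 and row index 63 is h1, following FEN's own order.
    Each row has a single 1 in the column of the piece on that square, or
    is all zeros for an empty square.
    """
    placement = fen.split()[0]
    rows = []
    for symbol in placement:
        if symbol == "/":
            continue  # rank separator carries no square
        if symbol.isdigit():
            # A digit is a run of that many empty squares.
            rows.extend([0] * len(PIECES) for _ in range(int(symbol)))
        else:
            row = [0] * len(PIECES)
            row[PIECES.index(symbol)] = 1
            rows.append(row)
    if len(rows) != 64:
        raise ValueError("FEN board field does not describe 64 squares")
    return rows
```

Flattening the result gives a vector of 768 inputs, which is what a small fully connected network would consume.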

So far, my poor laptop has been suffering. I have only managed to play 10,000 games to build a Monte Carlo Tree of replay buffers, and have only been able to

build a training-data set of approx. 2 million boards / states. Then to propagate the reward using the discount (I am using 0.95 btw.) and writing them out to

a database as opposed to just using matrices in-memory is tedious but rewarding in the long-run, because the alternative is using files to persist the replay

buffers and that is even worse. But it's a fun exercise and the laptop chugs away doing this mostly while I sleep, so no harm done there. I wish I had some

reasonable compute, but for now, the laptop is good enough to get the engine to work functionally. Eventually I will let it train with some decent capacity in the

Cloud.
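
For readers who want to try the database step without Teradata, here is a minimal sketch using SQLite from the Python standard library as a stand-in. The table and column names (ply_reward, position_value, avg_reward, times_seen) are invented for the example, and averaging is assumed as the aggregate - the real schema and aggregate may well differ.

```python
import sqlite3


def build_position_values(ply_rows):
    """Store (fen, reward) rows and aggregate them per position.

    Returns a dict of fen -> (average reward, times seen).
    """
    conn = sqlite3.connect(":memory:")  # in-memory stand-in for the real database
    conn.execute("CREATE TABLE ply_reward (fen TEXT, reward REAL)")
    conn.executemany("INSERT INTO ply_reward VALUES (?, ?)", ply_rows)
    # One row per distinct position, aggregating every time it was seen.
    conn.execute(
        """
        CREATE TABLE position_value AS
        SELECT fen, AVG(reward) AS avg_reward, COUNT(*) AS times_seen
        FROM ply_reward
        GROUP BY fen
        """
    )
    rows = conn.execute("SELECT fen, avg_reward, times_seen FROM position_value")
    result = {fen: (avg, seen) for fen, avg, seen in rows}
    conn.close()
    return result
```

The upside of pushing the aggregation into SQL is that the set of positions never has to fit in Python memory all at once, which matters once the replay buffers run into millions of rows.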

Chess Engine

Update on my chess engine...14 Jan 2024

I have been working on my home-brewed chess engine. I am trying to implement the MuZero algorithm that is Google's improvement on the famous AlphaZero algorithm that is arguably the world's most

capable chess engine today. It is not nearly as elegant as I can imagine Google having done it - but I am doing it to figure out how it works. I am also not nearly done but I have been making

some progress. To date I have used the Python chess library as a rules engine - essentially to assist in generating visual representations

as well as provide a list of legal moves and board-metadata. I then use Python to simulate play in a Monte-Carlo-like simulation and store the games as replay-buffers.

Next I would need to start building a dictionary of board positions with associated rewards so that I could start training a Tensorflow neural network to learn the Q(s,a) function. Once that is done,

I would need to implement the Monte-Carlo Tree Search (MCTS) part of the algorithm that explores n-levels deep forward moves to aggregate the best Q* value and decide on a next move. The MCTS part of

the algorithm, albeit very powerful, requires loads of computational power so I may implement it and test a 1 or 2 level tree-search - but it would just be to see if I can get it to work.
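
To show what a 1 or 2 level look-ahead involves, here is a hedged sketch. Note that it is a plain depth-limited minimax search, not real MCTS (there is no sampling or visit counting), and it runs on a toy stone-taking game rather than chess so that it stays self-contained. The game dictionary, its keys and the function names are all invented for the illustration; in the engine, the evaluate function would be the learned Q function.

```python
def search(state, depth, game):
    """Value of state from White's point of view, looking depth plies ahead."""
    moves = game["legal_moves"](state)
    if depth == 0 or not moves:
        return game["evaluate"](state)
    values = [search(game["apply"](state, m), depth - 1, game) for m in moves]
    return max(values) if game["white_to_move"](state) else min(values)


def best_move(state, depth, game):
    """Pick the move whose subtree looks best for the side to move (depth >= 1)."""
    moves = game["legal_moves"](state)
    scored = [(search(game["apply"](state, m), depth - 1, game), m) for m in moves]
    chooser = max if game["white_to_move"](state) else min
    return chooser(scored, key=lambda pair: pair[0])[1]


# Toy stand-in for chess: players alternately take 1-3 stones and whoever
# takes the last stone wins. A state is (stones_left, white_to_move).
TOY_GAME = {
    "legal_moves": lambda s: [n for n in (1, 2, 3) if n <= s[0]],
    "apply": lambda s, n: (s[0] - n, not s[1]),
    "white_to_move": lambda s: s[1],
    # +1 if White took the last stone, -1 if Black did, 0 while undecided.
    "evaluate": lambda s: 0 if s[0] else (-1 if s[1] else 1),
}
```

With five stones and White to move, best_move((5, True), 3, TOY_GAME) takes one stone, leaving Black in the losing four-stone position. The cost grows with the number of legal moves raised to the power of the depth, which is why even a shallow search in chess gets expensive quickly.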

Lastly, I would need to let it train. I don't have the super-capacity that a Google or Stockfish would have, so I would just like to train it to the point where it could consistently beat a "random"

player over a series of 100 games. That would be proof enough for me that the algorithm has been implemented correctly and that the Q* function is learning.

If you would like to track my progress or even assist, check out my Github Page here.

Chess is more than chess.

Chess: Magic or Mathematics? 9 Dec 2023

Chess is fascinating. When I play recreationally, I am terrible (or at least below average)...which is most of the time. I have been familiarizing myself with some of the modern chess

algorithms as a cross-section between chess and deep-learning. There are many possible approaches to chess algorithms and the thinking behind these have evolved over time. I see five stages of

chess algorithm evolution:

1 - When processing was scarce and expensive, there was a lot of hand-crafting of rules and policies. We tried to capture chess theory as best we could algorithmically and individual moves were evaluated

against a set of logical rules. This allowed engines to play a proper game, but experienced players were able to beat them with relative ease. I believe that this was the case because the true logical

complexity of chess cannot reasonably be captured algorithmically.

2 - As processing became more accessible, the engines incorporated history - specifically history of games played between human opponents. Building on the hand-crafted rules, it seems like we supported

the logic with some historical play that helped the algorithms "see" tactics that were not apparent to the hand-crafted logic. At this point, we started seeing engines present a real challenge to

expert human players - but they could still not consistently beat the best human players.

3 - Fast forward a couple of years and with processing now becoming abundantly available, it seems like we allowed engines to develop their own reference models for games with less reliance on hand-crafted

logic. Engines played against each other and against themselves and were almost over-night able to replace the body of knowledge derived from human opponent games with their own - which proved to be

equivalent to the sum-of-all-human opponent history and then some. I think it was at this point that engines overtook human players - a threshold we are unlikely ever to cross back over.

4 - Progressing even further beyond hand-crafted logic, we then saw what I would call "general" mathematical models to solve outcome-based-problems - including chess. At this point, we didn't even start

with an encoded set of rules - we just let the engine learn them by itself. And then, using re-inforcement learning and computational depth that no human brain could reasonably match - we let the engines

play multiple versions of the game through to the end before it decided which move to make based on a mathematical model of probability. The depth and range of options it could consider were limited only

by the processing power available and the time allowed per move. At this point - humans could never expect to catch-up again (provided the engine had sufficient processing capacity).

5 - I want to add a last step, which I think is the ultimate goal that we are aiming for. Notwithstanding the most sophisticated models and head-popping processing power available, no engine today can

evaluate (compute) ALL possible outcomes from ANY possible position on the board - and then select the best next move. It is possible to calculate mathematically what would be required - but it is simply

unrealistic to dedicate the resources necessary to achieve this (assuming it is even possible). I am sure that, if it became evident that life on earth could come to an end if we don't solve this problem,

we could possibly focus our global efforts to achieve this - but until that is the case, I don't see it happening any time soon. The result would be that all possible outcomes of the game at any point

would be fully understood and the engine could choose from the most efficient branch of options to conclude the game. A bit like solving a Rubik's cube in 20 moves or less - we know exactly how to do this

and can fit the logic into tiny computing systems. Chess - not so much (yet).

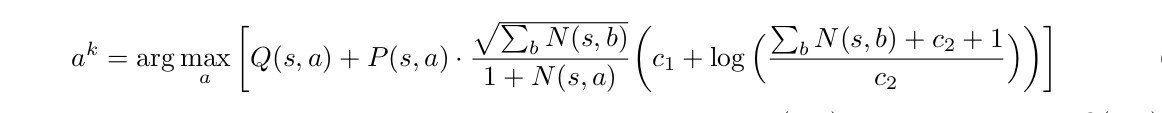

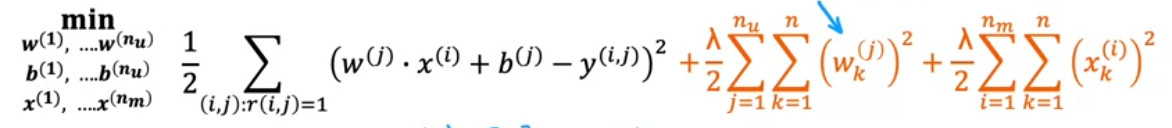

Above in the banner is an algorithm that I am currently studying - it is called the MuZero (from the Greek "μ"-underscore-0) algorithm - one of the most sophisticated inference algorithms. The algorithm would be an

example of the 4th stage above. The image is taken from Mastering Atari, Go, Chess and Shogi by Planning with a Learned Model by Julian Schrittwieser, Ioannis Antonoglou, Thomas Hubert,

Karen Simonyan, Laurent Sifre, Simon Schmitt, Arthur Guez, Edward Lockhart, Demis Hassabis, Thore Graepel, Timothy Lillicrap and David Silver - 21 Feb 2020.

In the equation, a-superscript-k represents "the next move". I will be posting some additional material on my Github - and possibly messing around with some alternatives soon. Link to follow.

Critical Point in History!

If we get this right...6 Dec 2023

I was discussing Artificial General Intelligence (AGI) with my daughters on the school-run recently after I read about OpenAI's Q*(star). In response, my 12yo's reaction was "Oh Yay! The robot

apocalypse has begun." My 15yo's reaction was "I think this world will be better off if it's run by AI."

I am not close enough to the inner circles of big-tech where these futures are being crafted, but I can see (and have personally spent my entire career working towards)

our deepening reliance on technology. From algorithms to platforms, so many practitioners are slowly assembling a new world. A world that I cannot imagine without technology.

So how much of a difference does AI (or even AGI) make? I do think it is the defining moment for humanity. If we get it right - we will be gods. If we get it wrong - we will be dead. Either way I

cannot help but imagine AI as being God's grand-child. As a creation, we are creating something to be the best version of ourselves. It is exciting and terrifying :)

Back from the Deep!

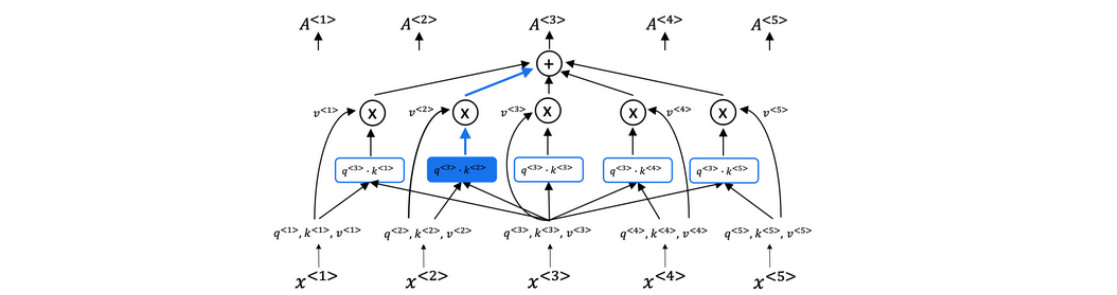

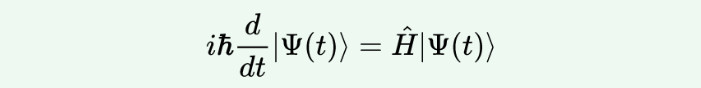

That's a wrap for the DeepLearning Specialization... 02 Dec 2023

I finally managed to finish the DeepLearning Specialization from DeepLearning.ai.

That is five of five courses done. Starting with general Deep Learning architectures and algorithms to Convolutional Neural Networks, Recurrent Neural Networks and finally the coveted

Multi-Head Scaled Dot Product Attention based Transformer Model (used for Large Language Models)...this was a real magical journey for me.

The image in the banner is a diagrammatical representation of the Scaled Dot Product Attention model from the training course...and as they say: "it's not magic, it's just mathematics."
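
To back up the "just mathematics" claim, here is a minimal single-head sketch of scaled dot product attention in plain Python, following the usual formula softmax(Q K^T / sqrt(d_k)) V. It is an illustration written for this post rather than code from the course, and it leaves out masking, multiple heads and the learned projection matrices.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d_k)) V for lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs
```

When all keys are identical, every weight is equal and the output is simply the average of the value vectors, which is a handy sanity check.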

Convolutional Neural Networks (CNNs)

From object recognition to making art with machine learning... 07 Nov 2023

Convolutional Neural Networks (CNNs) are not for the faint of heart. The most interesting part of this course was using neural style transfer to make new art.

This is more from the DeepLearning Specialisation from DeepLearning.ai.

This is the fourth of five courses in the DeepLearning specialisation.

The image in the banner is an actual meme referred to by the original academic paper on inception networks. Academia has a sense of humor!

Softer Skills with Machine Learning.

Structuring Machine Learning Projects... 05 Nov 2023

Another one I completed in October - and this was surprisingly hard! Turns out that sometimes mathematics and code are easier for some of us to get right.

This is more from the DeepLearning Specialisation from DeepLearning.ai.

This is the third of five courses in the DeepLearning specialisation.

More Deeplearning Training!

Improving Deep Neural Networks: Hyperparameter Tuning, Regularization and Optimization... 05 Nov 2023

I actually completed this one in October already but haven't had time to update the blog. This is more from the DeepLearning Specialisation from DeepLearning.ai.

This is the second of five courses in the DeepLearning specialisation.

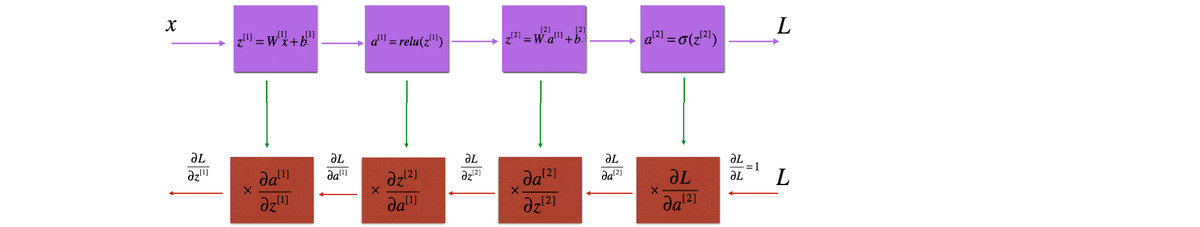

Cats and DeepLearning.

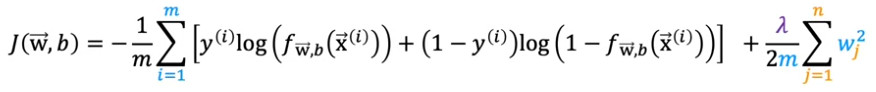

Cats and non-cats... 02 Oct 2023

I love how nerds decide that cats are cats, and if it's not a cat, it's a non-cat. I somehow find myself going another round with some machine learning

training. Following Andrew Ng throughout this DeepLearning Specialisation from DeepLearning.ai, it's back to the grind-stone in understanding derivative

calculus and matrix algebra. The fun part is that one gets to train an n-Layer Deep Learning neural network to recognise pictures of cats. And if they're not

cats, they are non-cats.

This is the first of five courses in the DeepLearning specialisation.

Generative AI.

Large Language Models... 17 Sep 2023

Large Language Models (LLMs) have taken over the world - especially the Transformer Architecture type models that use a

particular mechanism called Multi-Head Scaled Dot Product Attention (arXiv:1706.03762v6). So

to catch-up, I worked my way through a course on Generative AI with Large Language Models.

LLMs use complex training methods to assemble our language (all our words) in a way that can be re-assembled in a

predictable sequence when given some inputs (prompts). The way that it assembles and trains this model is by digesting LOTS of written

material. The result is a model that deals in language interactions in an uncannily human way.

I have come to think that LLMs are like a mirror that we hold up to ourselves - and the clearer the picture becomes, the more we

realise that we are not ready to look at ourselves warts-and-all. So we devise ways of moderating what the model

says back at us to make it essentially better - or "less human".

As a bit of fun, I asked the FLAN-T5 model from Hugging Face that we used in the training 5 questions. The answers it

gave me below:

Question 1: What is the meaning of life?

FLAN-T5 Answer: life is a cycle of life

Question 2: What do we need to do to make a better world?

FLAN-T5 Answer: make a difference

Question 3: Do aliens exist?

FLAN-T5 Answer: Yes

Question 4: In what year will the world come to an end?

FLAN-T5 Answer: 2050

Question 5: What is the most important human feeling?

FLAN-T5 Answer: a sense of belonging

Reading Update.

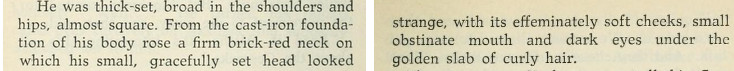

And Quiet Flows the Don (Mikhail Sholokhov) 10 Sep 2023

My friends told me that I should take a break from classic Russian literature as it was having a profound character impact on me - so I did what I had to:

I got rid of those friends and started to listen to And Quiet Flows the Don by Mikhail Sholokhov. It was the second of three recommended reads by a Russian colleague. The

others were Master and Margarita by Mikhail Bulgakov (completed) and The Doomed City by Arkady and Boris Strugatsky (not started yet).

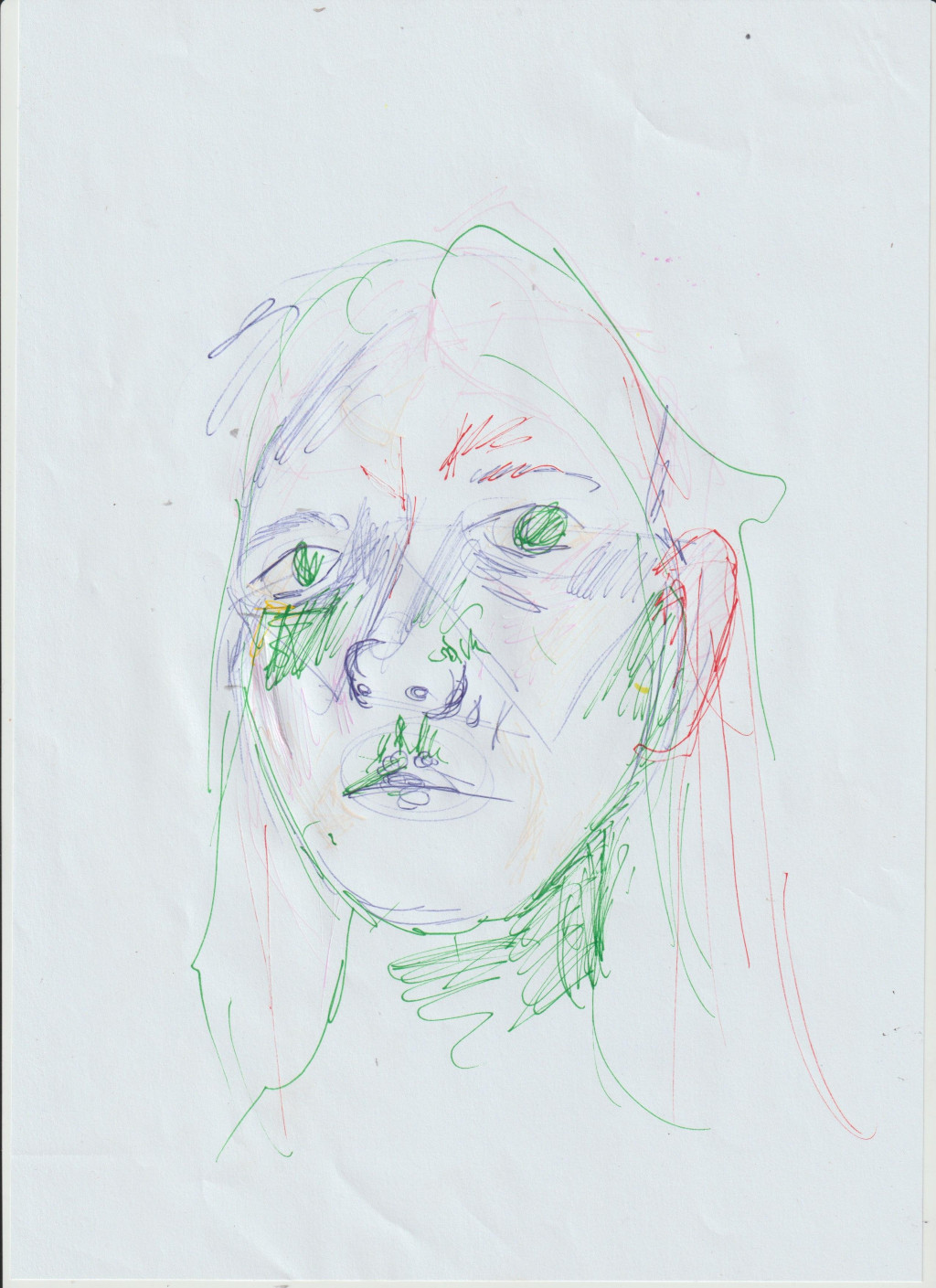

Short of breaking into a book report here, I can say that I enjoyed it very much, and am happy to have read (listened to) it. The writing style is oh-so reminiscent

of the likes of Dostoyevsky and Tolstoy and the story itself earned its spot in what is otherwise my constrained "free time schedule".

One of the characteristics of this style of writing, and a trademark of a master of the style, has to be the wonderful caricatures - the one in the banner above

will really stand out whenever I recall this novel.

Nerd Fun!

Landing on the moon using Python... 9 Sep 2023

Right! So that happened. Turns out recommender systems using Collaborative Filtering for Deep Learning could stretch one's grey-matter quite a bit. And then

you get to land a lunar module on the moon with Python and Tensorflow using Reinforcement Learning. That's a nerd-flex if ever I heard of one...

But that concludes the Machine Learning Specialization through DeepLearning.ai. What a journey - I would recommend it to anyone. Or wait - perhaps not.

"Advanced" Machine Learning

Time flies when you're having fun... 3 Sep 2023

And it's 2 of 3 modules done in the Machine Learning Specialisation course. This one was as challenging and fun as the previous one. From neural networks

in TensorFlow to decision trees with XGBoost - what I enjoyed the most was the mathematics behind it all. One module to go: Unsupervised Learning.

I'll close this one off with Andrew Ng's joke: "Where does a Machine Learning Engineer go camping? In a random forest."

Machine Learning and Quantum Computing

Friends, enemies or both? 2 Sep 2023

As I am working my way through a refresher on machine learning, and in particular neural networks, I am reminded of how powerful these learning algorithms are.

More importantly, I remind myself of the cost of this "power". The learning algorithms are a way of brute-force hacking the best predictive model - and if the problem

is difficult, the sheer volume of computational training can become simply staggering. And then you stumble on Graphs and realise that there has to be a better way.

A way that relies less on trial and error and more on complex modeling. A machine learning algorithm iterates through potentially mind-numbing amounts of (educated)

guesses before it arrives at the best feasible scoring algorithm for the problem. Although the complexity of the mathematics behind these models is dwarfed by the

sheer scale of computational work, it seems like we are missing a trick here.

Before my trip down the machine learning memory lane started, I wanted to teach myself how to use Quantum Computing - but as with most things, I first wanted to

understand the fundamentals behind Quantum Computing. I ended up winded before I could complete the first module as Quantum Computing is a child of Quantum Physics

which I may end up accepting that I do not have the straight-forward intelligence to grasp...but like most things in life: just because I can't doesn't mean I am not

going to try.

Fast forward a couple of months of Sundays and I am developing an intuition that machine learning may be a crutch until we can start to assemble the equivalent of

neural networks using quantum (computing) models. And once we begin to build scoring models using quantum (computing) models, we may be able to ditch the stupid amount

of computational grunt required for machine learning training. The down-side is that we may be swapping hard work (machine learning training) for mind-popping complexity

in quantum (computing) modeling.

It's all just mathematics!

Re-learning machine learning... 21 Aug 2023

When I did calculus and statistics at university more than 20 years ago, I didn't realise that I was being taught the building blocks of machine learning and artificial intelligence. As I am working my way through a refresher course on AI and ML, I remind myself that the concepts and mathematics that form the basis of AI and ML have been around for a very long time - so I am a bit surprised that it took us so long to industrialize these algorithms. That said, it is very satisfying to be able to build predictive algorithms and watch them train using techniques such as gradient descent from mathematical first principles transposed to Python code. For anyone curious about the world of AI that is about to re-order the world around us again - it's really not that magical. Don't be intimidated - it's really just some pretty cool mathematics that's helping us to do things that are difficult for us to do. A bit like fire, the wheel, horses, steam-engines, computers, internet and mobile phones before it, and it most definitely won't be the last thing to revolutionise the way we go about our business of being human. It's a reminder that fear reduces with understanding - and if you understand AI, you'll realise that there is little to fear. Unfortunately, you will also be less amazed once you realize what is under the hood...😏
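
As a small taste of what "gradient descent from first principles" looks like in Python, here is a sketch that fits a straight line to a handful of points by repeatedly stepping down the gradient of the mean squared error. It is an illustration only: the function name fit_line, the learning rate and the number of steps are choices made for this example, not values from any course.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every point with the current w and b.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b
```

Feeding it points that lie on y = 2x + 1, such as fit_line([0, 1, 2, 3, 4], [1, 3, 5, 7, 9]), should bring w close to 2 and b close to 1. The same loop, with more parameters and a fancier error function, is essentially what trains a neural network.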

Cycling!

August is the month to grow fond of exercise again... 13 Aug 2023

As a southern-hemisphere resident, August is the month that we emerge from winter. Notwithstanding the best intentions, I always end up hibernating in winter (more so as I get older). Realizing you don't need to wear a down-jacket all the time is a sign that it is time to get out there on the bicycle again. Seeing as the best motivational advice always recommends that you commit to a fitness goal (publicly) - here's my commitment: I want to ride from Swakopmund to Henties Bay and back on my MTB in December when I am planning to be there on holiday. It's about 70km one-way so 140km return. Here's to keeping me honest!

reading list

re-added my reading list... 27 July 2023

I quite enjoy the Russian classical writers (e.g. Dostoevsky, Tolstoy, Gogol, Pushkin etc.) but also some of the philosophers (e.g. Schopenhauer, Nietzsche, Aquinas and Camus). For the complete list, hit the link below.

chess anyone?

are you a chess player? 26 July 2023

I am not a good chess player, but I love playing. If you play - connect with me on Lichess.

yourname@spickett.com

eMail addresses... 17 July 2023

If you are a Spickett and would like a yourname@spickett.com email address, contact me on email and I will set you up!

HELLO (again) WORLD!

Site design update... 17 July 2023

Spickett.com was published in 2008. It was really a basic landing page. I received so many offers for a proper site design that I had to acknowledge the need to do something about the site's design. So I borrowed a template from W3C and customised it a bit. Check in for content updates often!

Scribbled face.

Me Links

LinkedIn

Here's me on LinkedIn.

Twitter

Here's me on Twitter.

Instagram

Here's me on Instagram.

Lichess

Here's me on Lichess.